When someone suggested we build a “conversation bot” for the Badminton Horse Trials, so that eventing fans could get live results, news and interviews from the event, we originally thought “why?”, then “why not?”, and then “how?”.

It’s been quite a learning curve to understand how to build a natural-sounding, entertaining and useful service for Badminton. For those starting out with building chatbots, voice interfaces, or similar using the latest consumer platforms, here are some of the things we’ve learned.

Let’s talk about the state of chat

You’ve heard of Siri, right? The smartphone feature that never really works...

In recent years, major tech companies have been trying to persuade us to use voice and chat more and more: Apple’s Siri, OK Google, Amazon’s Fire TV, Facebook Messenger, Slack, Skype, Samsung’s S Voice and now Bixby. It seems we can’t escape the move towards voice- or chat-based artificial intelligence assistants.

Thankfully, they’re becoming more intelligent and less artificial. With recent upgrades to Siri, and with the launch of Amazon’s Echo and Google Home, speech recognition and learning are improving rapidly. You can now easily take control of your phone, music system, TV, lighting, HVAC, and refrigerator (yes, really!). “OK Google, bring the Tesla round to the front door” is no longer a pipe-dream (owning a Tesla still is).

Voice interfaces are here to stay, and businesses are starting to think about how to make use of the technology to improve how they engage with their audiences. Everyone wants to have great conversations with their customers, but putting real people on the end of a phone or chat window can be expensive. With artificial intelligence technology, real-time, interactive, conversational interactions with customers are now massively scalable at a fraction of the traditional cost.

Old friends, new technology

Having worked with Badminton Horse Trials on a number of exciting web projects, we know that they are willing to try a new idea if it will help to open up eventing to a wider audience.

When we met up with our old friend, Dominic Sancto (a voice interface expert from the days when voice interface was a brand new thing), the conversation naturally turned to, errr.. conversations, and what we might be able to do for Badminton.

With Dominic’s expertise and our technology stack, we approached Badminton with an idea: we’d build an Amazon Echo skill which would provide real-time results and information during the live five-day event.

They were impressed, and gave us the green light to produce a prototype.

Designing the voice interface & flow

The first step was to design the voice interface and user journey. As with any website or software design, you need to know what your users are trying to achieve, and make it as easy as possible for them to get where they need to be.

The key to a great conversation is that it is free-flowing, and so right from the start we wanted to build an application that felt natural and interactive rather than sounding like an FAQ. In the case of a voice interface, this means thinking about what the users might want to do (“intents”), questions they might ask (“utterances”), with what variations (“entities”), what responses we could offer, and what the user might want to do next.

Intent (what the user wants): “Give me results”

Utterance (what the user says): “Where did {Rider} come in the Dressage?”

Entity (variable data): {Rider}

Entity options: Mark Todd, Izzy Taylor, Michael Jung, etc...
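To make the terminology concrete, here is a minimal Python sketch of how utterance templates and entity lists multiply into concrete phrases. It is an illustration, not the model used for Badminton: the intent name `GetResults`, the second template, and the `{Phase}` entity are assumptions added for the example.

```python
from itertools import product
import re

# Hypothetical interaction model; names and structure are illustrative only.
INTENTS = {
    "GetResults": [
        "Where did {Rider} come in the {Phase}?",
        "How did {Rider} do in the {Phase}?",
    ],
}

ENTITIES = {
    "Rider": ["Mark Todd", "Izzy Taylor", "Michael Jung"],
    "Phase": ["Dressage", "Cross Country"],  # assumed second entity
}

SLOT = re.compile(r"\{(\w+)\}")


def expand(template, entities):
    """Yield every concrete phrase that one utterance template can produce."""
    slots = SLOT.findall(template)
    for values in product(*(entities[s] for s in slots)):
        phrase = template
        for slot, value in zip(slots, values):
            phrase = phrase.replace("{" + slot + "}", value, 1)
        yield phrase


phrases = [p for t in INTENTS["GetResults"] for p in expand(t, ENTITIES)]
print(len(phrases))  # 2 templates x 3 riders x 2 phases
print(phrases[0])
```

Even this tiny model yields a dozen phrases, which is why a few hundred templates quickly turn into thousands of variations.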

Dominic produced a very wide range of utterances and entities, and we spent a long time getting the conversational flow right. In the end, we have over a dozen intents, over one hundred utterances with thousands of entity variations, and a great conversational feel.

The technology

As this was our first foray into a voice interface for Badminton, we wanted to understand the impending technology challenges early without spending too long on the detail. For us, this meant getting a disposable prototype up and running quickly, so that we could test the end-to-end concept and then iron out any problems.

Amazon provide some excellent developer tools and tutorials, including free “serverless” hosting using their Lambda system. Lambda supports Java, Node.js and Python, so to get started quickly we chose Python for our prototype. After a few hours we had a working demo and a better understanding of the flow of data between the user, Amazon, and our system.
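For readers who have not seen that flow of data, the sketch below shows roughly what a Python Lambda handler for an Alexa custom skill looks like: Alexa sends a JSON request describing the intent and its slots, and the handler returns a JSON response containing the speech. This is not the prototype itself; the intent name `GetResults`, the `Rider` slot and the placeholder result text are assumptions.

```python
def build_response(text, end_session=True):
    """Wrap plain text in the Alexa custom-skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


# Placeholder data for illustration; a real skill would query a results feed.
RESULTS = {"mark todd": "Placeholder result text for Mark Todd."}


def lambda_handler(event, context):
    """Entry point that Lambda calls with the JSON request sent by Alexa."""
    request = event.get("request", {})

    if request.get("type") == "LaunchRequest":
        # The user opened the skill without asking anything yet.
        return build_response("Welcome. Ask me about a rider.", end_session=False)

    if request.get("type") == "IntentRequest":
        intent = request.get("intent", {})
        if intent.get("name") == "GetResults":  # hypothetical intent name
            slots = intent.get("slots", {})
            rider = (slots.get("Rider", {}).get("value") or "").lower()
            text = RESULTS.get(rider, "Sorry, I don't know that rider.")
            return build_response(text)

    return build_response("Sorry, I didn't understand that.")
```

Keeping the handler a pure function of the incoming JSON also makes it easy to call locally with a hand-written request.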

With the prototype running, we then had some technical choices to make. Building upon the slightly shonky Python prototype would be OK for a while, but Badminton is an internationally renowned sporting event, and although Amazon’s Echo device is only just starting out, we knew that there was a huge opportunity for us if we got it right. Not only for the Echo, but for Facebook Messenger, Google Home - due to launch a few months later - and Apple’s new “Siri on Steroids” which is due any time soon. And not only for Badminton, but for a very wide range of other businesses who are starting to dip their toes into these new platforms. This was a real business opportunity and so we had to build a solid solution on a scalable architecture.

Our technology of choice at the moment is PHP. Not because we love PHP, but because we love Drupal and WordPress. About 80% of the projects we work on are based on Drupal or WordPress, and we find their performance, flexibility, documentation and amazing community to be a huge benefit when planning, building and supporting much of what we do. As Amazon’s Lambda service doesn’t support PHP yet, and because we already have a great hosting platform of our own, we chose to develop the “version 1” Badminton application using our own PHP-Linux stack.

Chatbots are not about technology, they’re about conversations

We knew right away that if we wanted the conversations to feel natural, we needed great content, and our voice experts needed to be able to manage the conversation in an easy and sustainable way. Our first prototype supported only a limited number of utterances and responses, and to scale for Badminton, and to support other situations, we needed to provide our team with a content management system (CMS) so that they could create intents, responses and conversational flows without technical people “getting in the way”.

The CMS needed the following features:

- Easy interface for editing responses

- Scalable to support unlimited intents, responses and entities

- High-performance, so that we could sustain thousands of concurrent user conversations

- Ability to translate inbound utterances into real Badminton data (“Toddy” is a nickname for “Mark Todd”), and to handle dates and days (“What’s happening tomorrow”, “How did he do in the Cross Country” could both map onto Saturday results)

- Session handling and data storage, so that we could build user profiles and “remember” our users

- Flexible APIs so that we could interact with Alexa, Google Home, Messenger, and any other voice interface device that comes up in future

- Outbound integration capabilities to support data gathering from third-party sites

- Easy for our team to get started with, and powerful enough to deal with everything we haven’t thought of yet!
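The translation requirement in the list above is the least obvious one, so here is a minimal Python sketch of the idea: spoken values are normalised to canonical rider names, and relative days or phase names are mapped onto a calendar date. The function names, the alias table layout and the dates in `SCHEDULE` are illustrative assumptions rather than the VCMS implementation.

```python
from datetime import date, timedelta

# Nickname table; "Toddy" comes from the post, the structure is an assumption.
ALIASES = {"toddy": "Mark Todd"}

# Illustrative schedule: the date is an assumption chosen to fall on a Saturday.
SCHEDULE = {
    date(2017, 5, 6): "Cross Country",
}

RELATIVE_DAYS = {"yesterday": -1, "today": 0, "tomorrow": 1}


def resolve_rider(spoken):
    """Map a spoken name or nickname onto a canonical rider name."""
    key = spoken.strip().lower()
    return ALIASES.get(key, spoken.strip().title())


def resolve_day(spoken, today):
    """Map 'tomorrow' or a phase name onto a calendar date, or None."""
    word = spoken.strip().lower()
    if word in RELATIVE_DAYS:
        return today + timedelta(days=RELATIVE_DAYS[word])
    for day, phase in SCHEDULE.items():
        if phase.lower() == word:
            return day
    return None


friday = date(2017, 5, 5)
print(resolve_rider("Toddy"))
print(resolve_day("tomorrow", friday) == resolve_day("Cross Country", friday))
```

Asked on the Friday, “tomorrow” and “Cross Country” resolve to the same date, so a single results handler can serve both questions.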

We researched a number of options, and found that there was no one solution that provided everything we needed. Since we’re already Drupal experts, and there is a free contributed Alexa module available for Drupal, we decided to use it as a basis for our very own “voice content management system” (VCMS).

How we developed and tested the Alexa skill using Drupal

We began developing in early March. With the live event happening in early May, we didn’t have long to build, test and deploy the skill! We quickly identified a large number of potential features we might want to add: live results, analysis, news, FAQs, rider interviews, travel directions and more, and broke them down into a series of small sprints. Our aim was to learn quickly, and get as far as we could before the event.

As the Badminton skill was all about live news, events, information and travel directions, we also integrated with their live results service (XML and CSV formats), Google Maps API (JSON), and SoundCloud (XML and audio). We integrated all of these using custom PHP, and combined them with text and image data from Drupal to provide responses to the user. Session persistence and personalisation were handled by the Drupal storage system.
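As an illustration of the results integration, the Python sketch below turns a CSV feed into a spoken sentence. The real integration was written in PHP and the actual feed layout is not described here, so the column names and sample rows are invented placeholders.

```python
import csv
import io

# Invented sample feed; the real results service layout is not shown in this post.
SAMPLE_FEED = """position,rider,horse,penalties
1,Rider A,Horse A,30.0
2,Rider B,Horse B,32.5
"""


def load_results(csv_text):
    """Index feed rows by lower-cased rider name for quick lookup."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["rider"].lower(): row for row in reader}


def describe(results, rider):
    """Build a sentence suitable for speech output from one feed row."""
    row = results.get(rider.lower())
    if row is None:
        return f"I don't have a result for {rider} yet."
    return (
        f"{row['rider']} is in position {row['position']} "
        f"on {row['penalties']} penalties."
    )


results = load_results(SAMPLE_FEED)
print(describe(results, "rider b"))
```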

Testing the skill

Testing Alexa skills can be painful, as you have to actually talk to the device and wait for a response. Errors aren’t easy to diagnose, and if using the live Alexa service, there’s no easy way to debug your code. Developers want fast, repeatable tests that can be run from their own environment and don’t rely on third-party networks.

Since the Amazon Alexa APIs send and receive JSON data, we were able to quickly build a testing framework based on PHPUnit and JSON that could then call any of our intents with any utterances and entity values. PHPUnit allowed us to easily verify responses and we could run through 100+ tests in seconds.
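The same approach can be sketched in Python with the standard `unittest` module (the framework described above used PHPUnit). The `handle` function is a stand-in for the real skill endpoint, and `make_request` and the `GetResults` intent name are assumptions for the example.

```python
import json
import unittest


def handle(request_json):
    """Stand-in for the skill endpoint: JSON request in, JSON response out."""
    event = json.loads(request_json)
    rider = event["request"]["intent"]["slots"]["Rider"]["value"]
    return json.dumps({
        "version": "1.0",
        "response": {
            "outputSpeech": {
                "type": "PlainText",
                "text": f"Here are the latest results for {rider}.",
            },
            "shouldEndSession": True,
        },
    })


def make_request(intent_name, **slots):
    """Build the JSON Alexa would send for an intent with the given slot values."""
    return json.dumps({
        "version": "1.0",
        "request": {
            "type": "IntentRequest",
            "intent": {
                "name": intent_name,
                "slots": {n: {"name": n, "value": v} for n, v in slots.items()},
            },
        },
    })


class ResultsIntentTest(unittest.TestCase):
    def test_reply_names_each_rider(self):
        for rider in ["Mark Todd", "Izzy Taylor", "Michael Jung"]:
            with self.subTest(rider=rider):
                reply = json.loads(handle(make_request("GetResults", Rider=rider)))
                self.assertIn(rider, reply["response"]["outputSpeech"]["text"])
                self.assertTrue(reply["response"]["shouldEndSession"])


# Run the suite without exiting the interpreter.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ResultsIntentTest)
result = unittest.TextTestRunner().run(suite)
```

Because no device or network is involved, looping over every intent and entity value costs almost nothing per test.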

Extending to work with other smart devices

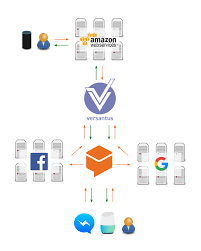

As well as the Echo skill, we wanted a solution for Badminton that could be used across Google Home, Facebook Messenger, Skype, SMS, Siri, and anything else that might be on the horizon. To allow this, we created our own API layer over the top of the excellent Alexa PHP library, allowing different inbound request types to be serviced by the same backend intent handlers.
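The pattern is easier to see in code. The Python sketch below (the real layer was PHP) normalises two inbound request shapes into one internal `IntentCall`, dispatches it to a shared handler, and formats the reply per platform. The Alexa request and response shapes follow Amazon's custom-skill JSON; the “chat” shapes, and all function and class names, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IntentCall:
    """Platform-neutral description of what the user asked for."""
    name: str
    slots: dict = field(default_factory=dict)


def from_alexa(payload):
    """Adapter for Alexa custom-skill IntentRequest JSON."""
    intent = payload["request"]["intent"]
    slots = {k: v.get("value") for k, v in intent.get("slots", {}).items()}
    return IntentCall(intent["name"], slots)


def from_chat(payload):
    """Adapter for a generic chat platform (hypothetical request shape)."""
    return IntentCall(payload["intent"], dict(payload.get("parameters", {})))


HANDLERS = {}


def handles(name):
    """Decorator that registers a function as the handler for an intent."""
    def register(fn):
        HANDLERS[name] = fn
        return fn
    return register


@handles("GetResults")  # hypothetical intent name
def get_results(call):
    return f"Looking up results for {call.slots.get('Rider', 'the leaders')}."


def dispatch(call):
    """Route a normalised call to its handler, whatever platform it came from."""
    handler = HANDLERS.get(call.name)
    return handler(call) if handler else "Sorry, I can't help with that yet."


def to_alexa(text):
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def to_chat(text):
    return {"text": text}  # hypothetical reply shape
```

With this split, supporting another platform means writing one inbound and one outbound adapter; the intent handlers stay untouched.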

With a few minor tweaks to the VCMS, we now had a system that would work consistently with any voice-enabled device, not just Echo. To test the theory, we tried developing a Slack bot using the API.ai platform, and we were able to get this working within a couple of hours.

Once integrated into API.ai, adding Skype, Facebook Messenger, Google Home and half a dozen other integrations is trivial, meaning we can move from an Amazon Echo conversation to a “wherever the user is” conversation. Amazing!

We’ve now launched the Badminton Alexa Skill, along with one to deal with Echo’s Flash Briefing news reports, and one for Rider Interviews. Feedback has been great so far, with Hugh Thomas, the Event Director, saying:

“I was very impressed with the demo Dominic Sancto and Nik Roberts presented when they approached me about the idea, and the final outcome is fantastic and more natural sounding than I had expected.”

During the event the application automatically updated with the latest results and news, but occasionally we wanted to tweak the responses to make them more relevant to what was happening on the ground. With the Drupal-based VCMS we developed, our editors could do this quickly and without any technical involvement, meaning that users always got the most natural-sounding responses, and the skill sounded more intelligent than it otherwise would have.

As the event has now happened, the application has switched to “results” mode, with most of the responses being focused on the results. We’ve already started developing the next version, with more intents and utterances, more intelligence, and more integrations with other voice platforms.

We’re looking forward to the future. The future’s already here.

Want to know more?

We work with a wide range of businesses, helping them achieve better results by investing in smarter technology. If you’d like to know more about our Voice Skills work with Amazon Echo, Badminton, or Drupal, or if you just want a chat about the latest technology and how it might help you, then call us on 01865 422112 or email hello@versantus.co.uk.